In this project, I automatically stitched two photos into a panoramic image, following the paper "Multi-Image Matching using Multi-Scale Oriented Patches" by Brown et al.

1. Corner Detection with Harris Function

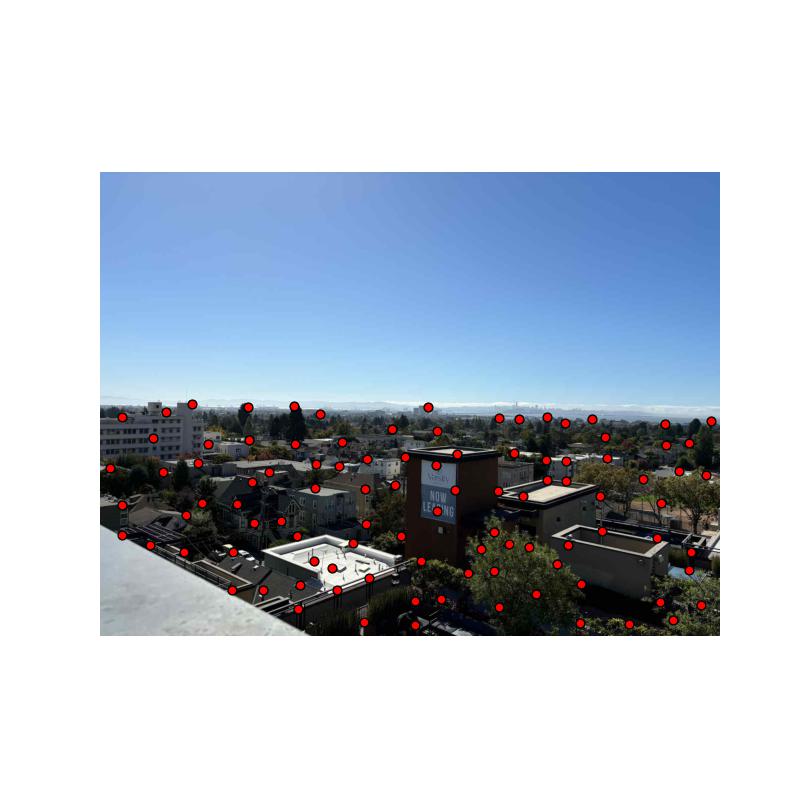

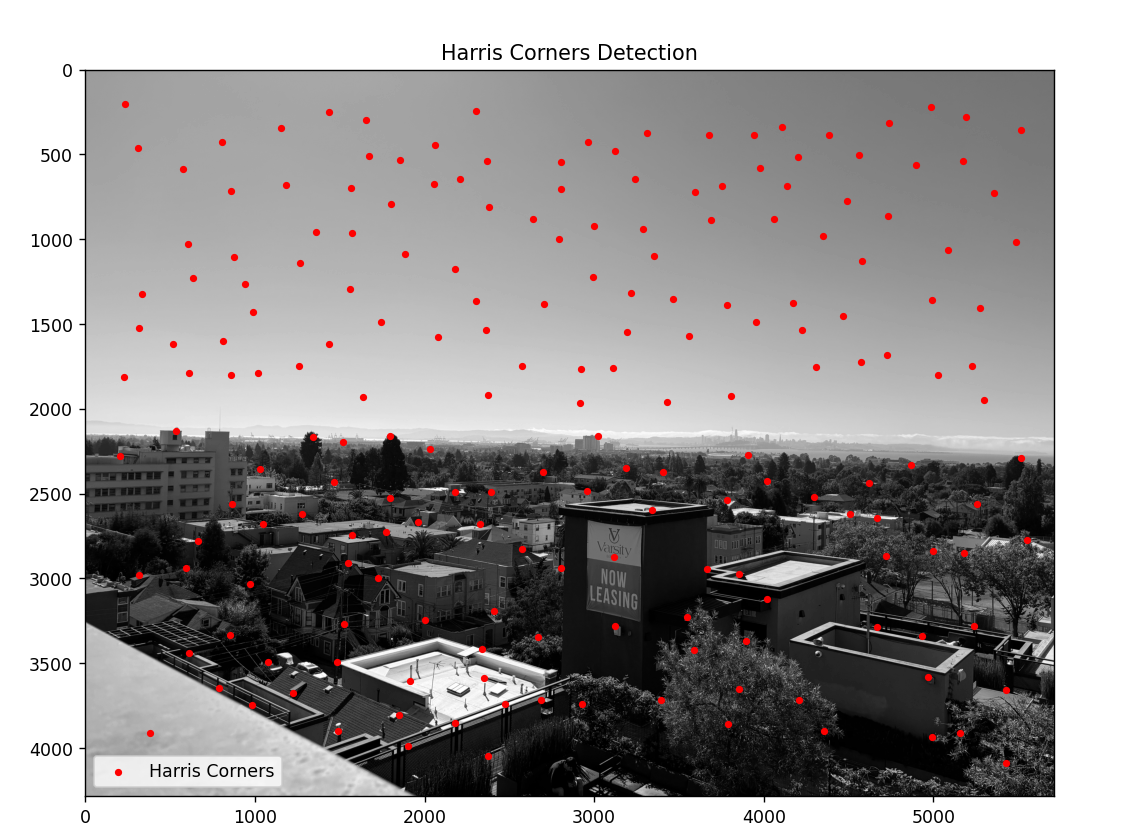

First, I computed the Harris corner response for both images and extracted corner points from it. The results are as follows:

Figure 1: Corner detection on Image 1.

Figure 2: Corner detection on Image 2.

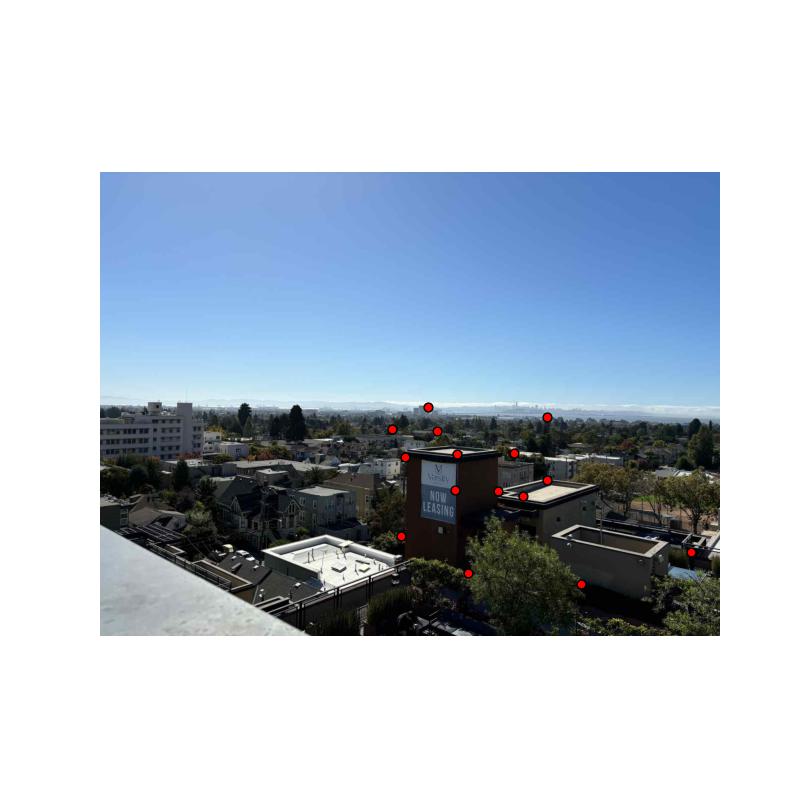
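The corner detection step of Section 1 can be sketched in plain NumPy. This is a minimal illustration, not the code used for the figures: the function names, the smoothing scale `sigma`, and the threshold are assumptions, and the corner strength here is the harmonic-mean form (determinant over trace) from the Brown et al. paper. The classic Harris measure, `det - k * trace**2`, would fit in the same place.

```python
import numpy as np

def gaussian_blur(img, sigma=1.5):
    """Separable Gaussian blur using only NumPy (borders handled by reflection)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    # Filter along rows, then along columns; "valid" removes the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def harris_response(gray, sigma=1.5, eps=1e-8):
    """Corner strength det(M) / trace(M) of the smoothed structure tensor M."""
    iy, ix = np.gradient(gray.astype(float))   # derivatives along rows, columns
    sxx = gaussian_blur(ix * ix, sigma)
    syy = gaussian_blur(iy * iy, sigma)
    sxy = gaussian_blur(ix * iy, sigma)
    det = sxx * syy - sxy**2
    trace = sxx + syy
    return det / (trace + eps)

def find_corners(response, threshold):
    """(row, col) of pixels that are 3x3 local maxima and exceed the threshold."""
    h, w = response.shape
    padded = np.pad(response, 1, mode="constant", constant_values=-np.inf)
    neighbours = np.stack([padded[dy:dy + h, dx:dx + w]
                           for dy in range(3) for dx in range(3)])
    is_peak = (response >= neighbours.max(axis=0)) & (response > threshold)
    return np.argwhere(is_peak)

# Usage on a synthetic image: a bright square has four corners.
gray = np.zeros((40, 40))
gray[10:30, 10:30] = 1.0
response = harris_response(gray)
corners = find_corners(response, threshold=0.5 * response.max())
```

Along a straight edge only one gradient direction is present, so the determinant is close to zero and the response stays low. Only points where both directions carry gradient energy survive the threshold.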

2. Adaptive Non-Maximal Suppression (ANMS)

To keep the corner points from clustering too densely and to spread them evenly across the images, I used the Adaptive Non-Maximal Suppression (ANMS) method from the paper. A corner is kept only if it is the strongest response within a given suppression radius, and the radius is increased until an appropriate number of corner points remains. The results are as follows:

Figure 3: ANMS applied on Image 1.

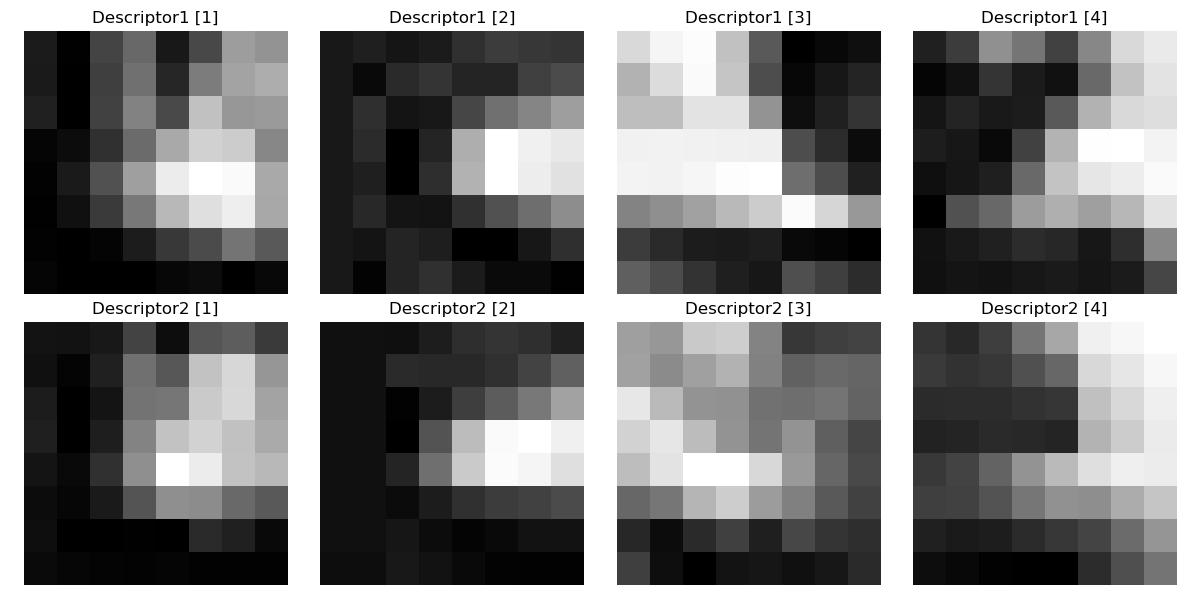
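The ANMS step of Section 2 can be sketched as follows. Rather than growing the radius step by step, this version computes for every corner the largest radius at which it would still survive (the distance to the nearest sufficiently stronger corner) and keeps the corners with the largest radii, which selects the same points. The function name and the defaults `num_keep=500` and `c_robust=0.9` are assumptions for illustration, not values taken from this write-up.

```python
import numpy as np

def anms(coords, strengths, num_keep=500, c_robust=0.9):
    """Return indices of the corners kept by adaptive non-maximal suppression.

    coords:    (N, 2) array of corner positions
    strengths: (N,) array of corner responses
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)

    # Pairwise squared distances between all corners (N x N, so O(N^2) memory).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff**2).sum(axis=2)

    # stronger[i, j] is True when corner j is sufficiently stronger than corner i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    dist2[~stronger] = np.inf

    # Squared suppression radius: distance to the nearest stronger corner.
    # The strongest corner has no such neighbour and gets an infinite radius.
    radius2 = dist2.min(axis=1)

    # Keep the corners with the largest suppression radii.
    order = np.argsort(-radius2, kind="stable")
    return order[:num_keep]

# Usage: three clustered corners and one isolated, weaker corner.
coords = [(0, 0), (0, 1), (1, 0), (50, 50)]
strengths = [10.0, 8.0, 7.0, 5.0]
kept = anms(coords, strengths, num_keep=2)
```

In this example the two kept corners are the strongest one and the isolated one, even though the isolated corner has the weakest response. Picking the top two by strength alone would have returned two neighbours from the same cluster.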

3. Descriptor Extraction

I extracted a descriptor for each corner point by sampling an 8×8 patch around it. The results are as follows (8 randomly chosen patches are shown):

Figure 4: Sample descriptors from Image 1.
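A possible form of the descriptor extraction is sketched below. The write-up only states that an 8×8 patch is sampled, so the details here are assumptions borrowed from the Brown et al. paper: the patch is obtained by downsampling a 40×40 window (here by averaging 5×5 blocks), it is axis-aligned, and it is normalized to zero mean and unit variance. The function name and parameters are hypothetical.

```python
import numpy as np

def extract_descriptors(gray, corners, window=40, patch=8, eps=1e-8):
    """Return (kept_corners, descriptors) for corners far enough from the border.

    Each descriptor is a patch x patch block-averaged window, normalized to
    zero mean and unit variance, then flattened to a vector.
    """
    assert window % patch == 0, "window must be a multiple of patch"
    half = window // 2
    step = window // patch
    h, w = gray.shape

    kept, descriptors = [], []
    for r, c in corners:
        r, c = int(r), int(c)
        # Skip corners whose window would leave the image.
        if r - half < 0 or c - half < 0 or r + half > h or c + half > w:
            continue
        win = gray[r - half:r + half, c - half:c + half].astype(float)
        # Average each step x step block: low-pass filter and subsample in one go.
        small = win.reshape(patch, step, patch, step).mean(axis=(1, 3))
        # Bias/gain normalization makes the descriptor robust to brightness changes.
        small = (small - small.mean()) / (small.std() + eps)
        kept.append((r, c))
        descriptors.append(small.ravel())

    kept = np.array(kept, dtype=int).reshape(-1, 2)
    descriptors = np.array(descriptors, dtype=float).reshape(-1, patch * patch)
    return kept, descriptors

# Usage: the second corner is too close to the border and is dropped.
rng = np.random.default_rng(0)
gray = rng.random((100, 100))
kept, desc = extract_descriptors(gray, [(50, 50), (5, 5)])
```

Sampling from a larger, smoothed window instead of taking the raw 8×8 pixels makes the descriptor less sensitive to small errors in the corner position.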

4. Corner Point Matching

Using these descriptors, I matched each corner point to the corner point in the other image whose descriptor is closest. A match is accepted only if the ratio of the distance to the nearest neighbor over the distance to the second-nearest neighbor is below a threshold (the ratio test). The matching results are as follows:

Figure 5: Matched corner points between the two images.
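The matching rule described above can be sketched with a brute-force nearest-neighbor search. The function name and the threshold `ratio=0.6` are assumptions, since the write-up does not state the value that was used, and the sketch expects at least two descriptors in the second image.

```python
import numpy as np

def match_descriptors(desc1, desc2, ratio=0.6):
    """Match rows of desc1 to rows of desc2 using the nearest-neighbor ratio test.

    Returns an (M, 2) integer array of index pairs (i in desc1, j in desc2).
    """
    desc1 = np.asarray(desc1, dtype=float)
    desc2 = np.asarray(desc2, dtype=float)

    # Euclidean distance between every descriptor pair (N1 x N2).
    diff = desc1[:, None, :] - desc2[None, :, :]
    dist = np.sqrt((diff**2).sum(axis=2))

    # Indices of the nearest and second-nearest neighbors in desc2.
    order = np.argsort(dist, axis=1)
    rows = np.arange(len(desc1))
    nearest, second = order[:, 0], order[:, 1]

    # Accept only matches that are clearly better than the runner-up.
    keep = dist[rows, nearest] < ratio * dist[rows, second]
    return np.column_stack([rows[keep], nearest[keep]])

# Usage: the middle query is equally close to two candidates and is rejected.
desc2 = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
desc1 = np.array([[0.1, 0.0], [5.0, 0.0], [0.0, 9.9]])
matches = match_descriptors(desc1, desc2)
```

The ratio test discards ambiguous corners, such as those on repeated textures, where the best and second-best candidates look almost equally good.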

5. Applying RANSAC and Image Blending

I ran the RANSAC algorithm for 1,000 iterations to estimate the transformation matrix between the two images, keeping the candidate supported by the most inlier matches. I then warped one image with this matrix so that it aligns with the other. For blending, I used Laplacian pyramid blending, which merges the images one pyramid level at a time. The final results are as follows:

Figure 6: Inliers of RANSAC.
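The RANSAC step can be sketched as below, assuming the transformation matrix is a 3×3 homography estimated from four random matches per iteration. The function names, the inlier tolerance `inlier_tol` (in pixels), and the fixed random seed are assumptions for illustration. Only the 1,000 iterations come from the text above.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography H with dst ~ H @ src (needs >= 4 point pairs)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through H, including the perspective division."""
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

def ransac_homography(src, dst, num_iters=1000, inlier_tol=2.0, seed=0):
    """Return (H, inlier_mask) for matched points src[i] <-> dst[i]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)

    for _ in range(num_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Degenerate samples can produce inf/nan errors; those count as outliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
            inliers = err < inlier_tol
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers

    # Refit on all inliers of the best candidate (assumes at least 4 were found).
    H = fit_homography(src[best_inliers], dst[best_inliers])
    return H / H[2, 2], best_inliers
```

A typical call would be `H, inliers = ransac_homography(pts1, pts2)`, where `pts1` and `pts2` are hypothetical arrays holding the matched corner coordinates in (x, y) order. Refitting on all inliers at the end usually gives a more stable estimate than the best four-point sample alone.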

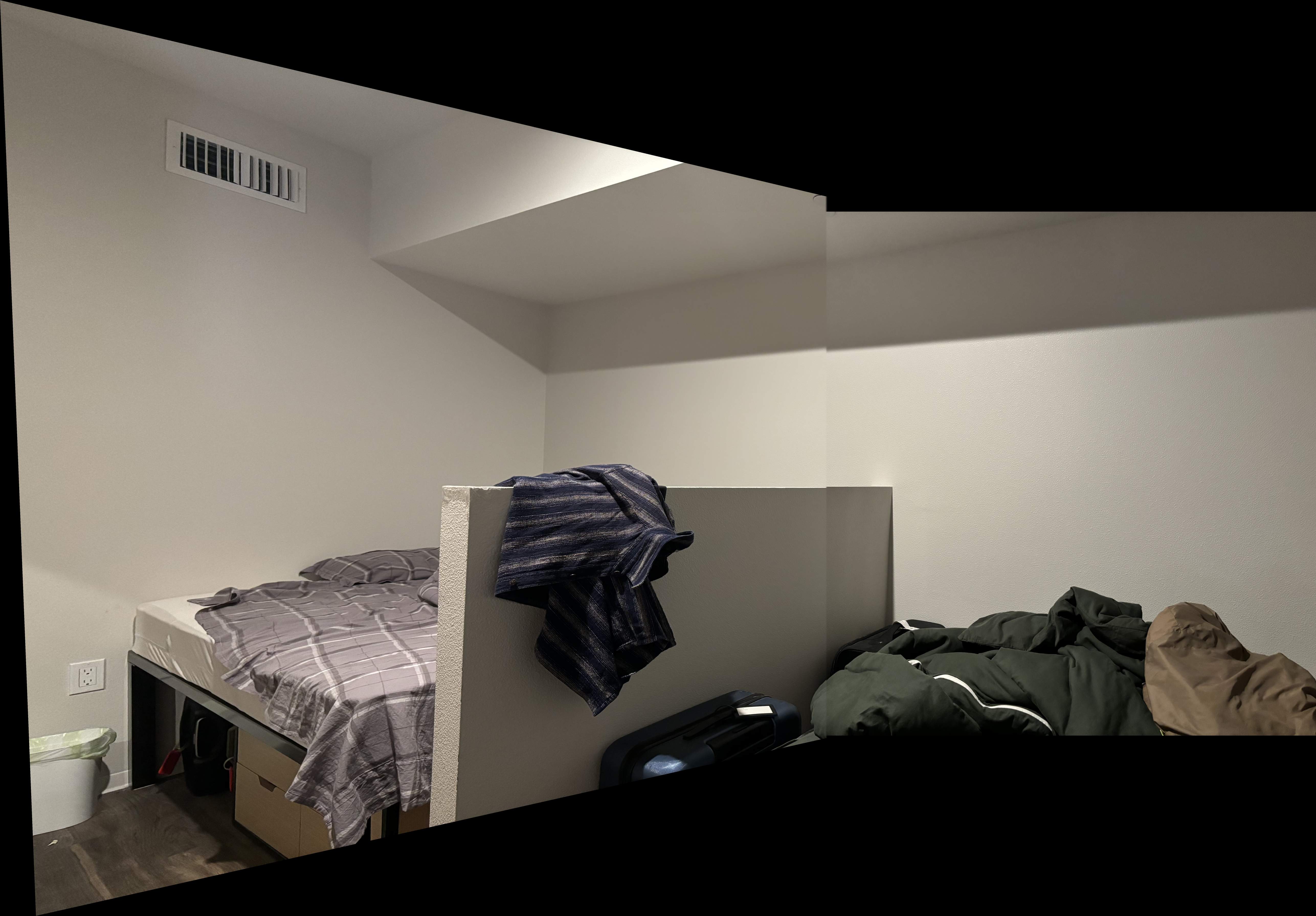

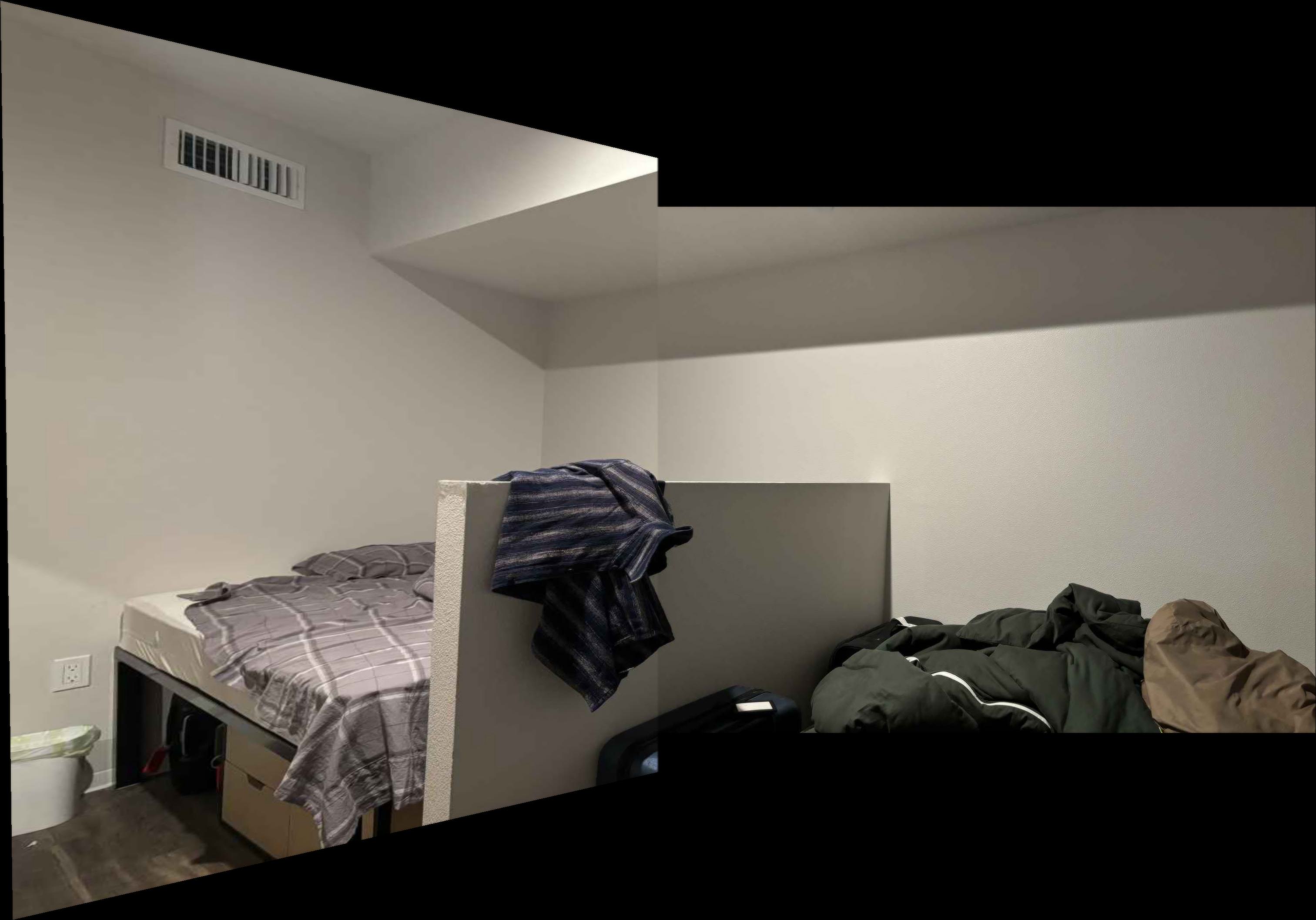

Figure 7: Left: manually aligned image (Project 4a). Right: auto-aligned image.
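The Laplacian pyramid blending used in this section can be sketched for two aligned grayscale images of equal size. The function names, the 5-tap binomial kernel, the number of levels, and the upsampling by pixel repetition are assumptions made to keep the example dependency-free. The `mask` is 1 where the first image should dominate and 0 where the second should.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16  # binomial low-pass filter

def blur(img):
    """Separable 5-tap blur with reflected borders (image sides must be >= 3)."""
    padded = np.pad(img, 2, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, KERNEL, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, KERNEL, mode="valid")

def down(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]

def up(img, shape):
    """Double the size by pixel repetition, crop to shape, then blur."""
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return blur(big[:shape[0], :shape[1]])

def gaussian_pyramid(img, levels):
    pyramid = [img.astype(float)]
    for _ in range(levels - 1):
        pyramid.append(down(pyramid[-1]))
    return pyramid

def laplacian_pyramid(img, levels):
    pyramid, current = [], img.astype(float)
    for _ in range(levels - 1):
        smaller = down(current)
        # Detail lost when going one level down in resolution.
        pyramid.append(current - up(smaller, current.shape))
        current = smaller
    pyramid.append(current)  # the coarsest level keeps the low-pass residual
    return pyramid

def blend(img_a, img_b, mask, levels=4):
    """Blend two aligned grayscale images level by level using a soft mask."""
    lap_a = laplacian_pyramid(img_a, levels)
    lap_b = laplacian_pyramid(img_b, levels)
    weights = gaussian_pyramid(mask, levels)
    blended = [w * a + (1 - w) * b for a, b, w in zip(lap_a, lap_b, weights)]
    # Collapse the pyramid from the coarsest level back to full resolution.
    out = blended[-1]
    for level in reversed(blended[:-1]):
        out = level + up(out, level.shape)
    return out

# Usage: take the left half from img_a and the right half from img_b.
rng = np.random.default_rng(0)
img_a, img_b = rng.random((64, 64)), rng.random((64, 64))
mask = np.zeros((64, 64))
mask[:, :32] = 1.0
result = blend(img_a, img_b, mask)
```

Because the mask is smoothed more strongly at coarser levels, low-frequency content is mixed over a wide region while fine detail switches over near the seam, which helps hide the transition. For color images, the same procedure could be applied to each channel separately.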

Additional Results

Here are some additional results:

Figure 8: Comparison between manually aligned and auto-aligned images.

Figure 9: Further blending results.